Responding to a post from @balajis: "VERIFIABLE VIDEO. We need verifiable video to prove that footage hasn’t been faked with AI…"

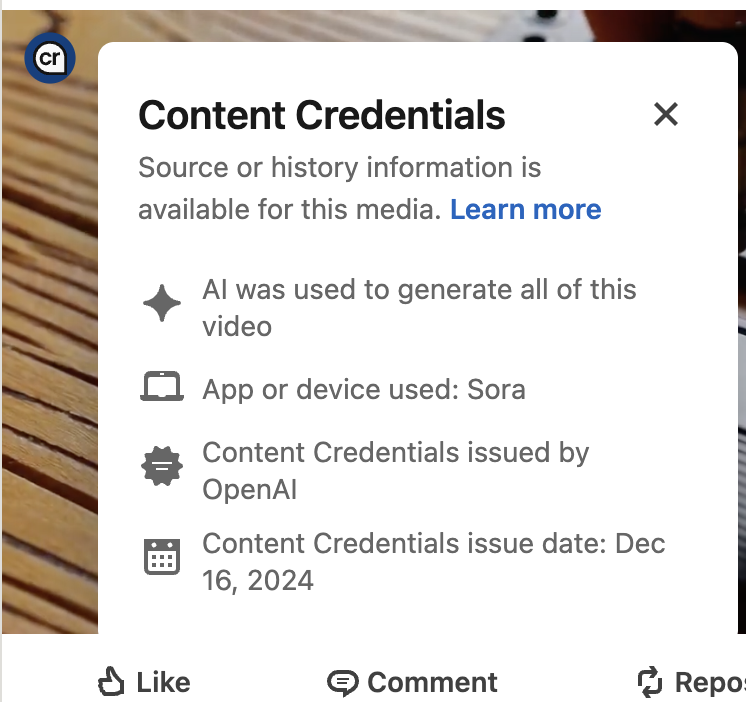

This is why I’m excited about C2PA (@C2PA_org). At OpenAI, we already attach signed provenance metadata to videos generated by Sora and images generated by ChatGPT. Social networks can parse this metadata and display it in feeds; the attached screenshot shows how LinkedIn handles this today.

C2PA is different from approaches like watermarking, DRM, or steganography. C2PA metadata can be trivially stripped, or lost during transcoding or cropping, by a platform that doesn’t respect C2PA. When it is preserved, however, the signed metadata lets you definitively prove that a piece of media was emitted by a given AI model, camera, or other system.
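To make the "strippable but verifiable when preserved" tradeoff concrete, here is a minimal sketch under loud assumptions. It is not the C2PA manifest format or any C2PA library API: the dict layout, field names, and `SIGNING_KEY` are hypothetical. Real C2PA uses certificate-based public-key signatures, so anyone can verify without holding a secret. This sketch swaps in HMAC-SHA256 only so it runs with the Python standard library. It shows three outcomes: an intact asset verifies, a stripped asset has no provenance at all, and edited media no longer matches the signed hash.

```python
import hashlib
import hmac
import json

# Hypothetical signer secret. Real C2PA uses public-key signatures backed by
# certificates; HMAC is a stand-in so this sketch needs only the stdlib.
SIGNING_KEY = b"demo-key-held-by-the-generator"


def _sign(claim: dict) -> str:
    """Sign a canonical JSON encoding of the claim (stand-in signature)."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def attach_manifest(media: bytes, generator: str) -> dict:
    """Bundle media with a manifest binding its hash to the claimed generator."""
    claim = {"generator": generator, "sha256": hashlib.sha256(media).hexdigest()}
    return {"media": media, "manifest": {"claim": claim, "signature": _sign(claim)}}


def verify(asset: dict) -> str:
    """Return 'verified', 'no provenance', or 'tampered'."""
    manifest = asset.get("manifest")
    if manifest is None:
        # Metadata was stripped: nothing to check, so the origin is unknown.
        return "no provenance"
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return "tampered"  # the claim itself was edited after signing
    if hashlib.sha256(asset["media"]).hexdigest() != manifest["claim"]["sha256"]:
        return "tampered"  # the media no longer matches the signed hash
    return "verified"


if __name__ == "__main__":
    asset = attach_manifest(b"frame bytes", "Sora")
    print(verify(asset))                                  # verified
    print(verify({"media": asset["media"]}))              # no provenance
    print(verify({"media": b"edited frame bytes",
                  "manifest": asset["manifest"]}))        # tampered
```

Note that stripping never produces a false "verified"; it only produces "no provenance", which is why the approach depends on learning to distrust media that arrives without a manifest.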

Very optimistic about a future where we attach signed provenance metadata to all media and learn to distrust any media without it.